
The Man Behind the Man Behind Trump

Immediately after his recent ouster from the Trump White House, Steve Bannon met with the man who had helped his own fortunes rise in the last few years: Robert L. Mercer—a millionaire going on billionaire—whose monetary contributions to various right-leaning political causes now rival those of the Koch brothers.

In a fascinating account of Mercer, published earlier this year in The New Yorker, Jane Mayer describes how Mercer first came to know Steve Bannon through their mutual friend Andrew Breitbart—the namesake of Breitbart News. Before long, Bannon was guiding the invisible hand of Mercer’s wealth to all sorts of pet projects.

After Breitbart’s unexpected death, Bannon stepped in to helm the site, and with the help of a $10 million investment from Mercer, grew it into a flagship site of the alt-right. And when Bannon left Breitbart to become Trump’s campaign manager, he brought Mercer’s super PAC along with him.

The logic here is simple: Bob’s money enabled Steve’s strategic vision, which itself enabled a reality show buffoon to become president.

The natural question, then, is: what’s the proximate cause of Mercer’s success? Who or what brought Robert Mercer all that considerable wealth?

The answer to this question, perhaps surprising, winds back in time to an advance in a niche field of artificial intelligence. Back in time before machines began to dominate all our favorite games: checkers, chess, Jeopardy, and Dota 2.

Back, even, to a time before the internet as we know it.

Lost in Translation

The idea of artificial intelligence has been around since the 1930s. Almost as soon as Alan Turing and others had invented the very concept of a computer, there was the idea that humans might eventually build machines that could think for themselves.

By the 1980s artificial intelligence was dominated by ‘expert systems.’ An expert system was the kind of AI you ended up with when you followed your intuition: create a list of rules for how the machine should behave in any given circumstance, an endless list of IF this THEN that statements.

In expert systems, the programmer creates the rules and the computer just implements them. You want a machine that can prove theorems? Easy, just program in the rules of algebra (using, of course, a set of IF/THEN statements) and feed the computer some variables. You want a machine that can converse with you? Easy, just program in the rules of grammar and feed the computer some words.
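To make the IF this THEN that idea concrete, here is a minimal sketch of a rule-based "conversation" machine. The rules and canned replies are invented for illustration; no real expert system is being quoted.

```python
def reply(message):
    """Answer a message by walking a hand-written list of IF/THEN rules."""
    text = message.lower()
    # Every behavior below was typed in by the programmer ahead of time.
    if "hello" in text:
        return "Hello! How can I help?"
    if "weather" in text:
        return "I cannot see outside, sorry."
    if text.endswith("?"):
        return "Good question."
    # Anything the programmer did not anticipate falls through to here.
    return "I do not understand."
```

Calling `reply("Hello there")` hits the first rule, while a message the programmer never thought of drops straight to the fallback line. The machine never gets better on its own; someone has to add another rule.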

There’s another approach, though, to such tasks. This approach is less about pre-programmed rules and more about recognizing patterns in data. This approach—more statistical than logical—has come to dominate artificial intelligence these days, powering everything from the algorithms that Netflix uses to decide which of their many superhero shows you’d most enjoy, to the algorithms that Amazon uses to make the concept of a microphone that is always on and listening to your conversations kinda, you know, endearing.

I own an Amazon Echo and find Alexa charming. My wife wants us to get rid of her.

We all have an intuitive sense of how expert systems work; it’s essentially how we learned things in elementary school. But the statistical approach to learning is less intuitive, despite the fact that it’s much closer to how we actually learn just about everything outside of a classroom.

The statistical approach is more akin to how children learn language in the first place. No one sits a one-year-old down and runs through an exhaustive list of vocabulary words to be memorized as soon as they can form sounds. No parent ever teaches a baby the concept of a “past participle.” Instead they just talk: to the child, around the child, about anything and everything really. Sometimes they point, other times not. And somehow the child eventually grasps relationships between words and people, words and actions. The relationship isn’t purely logical; it’s statistical. “Mom” comes to have meaning not by logical deduction, but because people keep using that word in her presence. Of course people use other words in her presence too, but “mom” is the one spoken most frequently.

Though the statistical paradigm existed in the late 1980s, it was overshadowed by expert systems. Where the expert systems merely required enough time to code up a tedious cacophony of “rules” that the system would be expected to follow, the statistical approach required data. Information from which to glean patterns. Not just a little data, either. A ton of the stuff. And in the 1980s, when a floppy disk wasn’t enough to store a single cat meme, data was a scarce resource.

Still, some researchers believed that the future of AI lay in the statistical paradigm, not in expert systems. These folks pressed on to find, sort and enter data—tedious bit by tedious bit.

In 1988, a research team at IBM succeeded in building a machine that automatically translated between different languages using the statistical technique. The results were impressive, besting state-of-the-art expert systems and ushering in a new era in machine translation that would last for decades.

The approach the IBM team took was rather simple. Forget exhaustive lists of words. Forget grammar. Immerse the machine in a sea of data and let it sink or swim. In this case, the data were transcripts of Canadian parliamentary proceedings. Since it was Canada, the transcripts existed in both English and French: test and answer key generated by a quirk of British Commonwealth bureaucracy.

Instead of predetermined rules for how to translate words between languages, the IBM team built a system that could automatically detect patterns between the texts, without being given any predefined rules of grammar. Over time the system created its own internal rules, statistical rules, for the probability that a given word in English would correspond to a given word in French (or vice versa):

bonjour and hello show up in the same sentences 99 percent of the time

plume and pen show up in the same sentences 87 percent of the time

Given only a few sample translated sentences, such a technique wouldn’t learn much. But give it decades’ worth of boring Canadian parliamentary proceedings, as the IBM team did, and suddenly you have a translation technology worthy of the name Babelfish.
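The co-occurrence intuition can be sketched in a few lines. This is only a toy under stated assumptions: the five sentence pairs are made up (they are not from the parliamentary transcripts), the names `PARALLEL`, `cooccurrence_rates`, and `best_match` are hypothetical, and the real IBM system used a more sophisticated probabilistic model than raw counting.

```python
from collections import Counter
from itertools import product

# Toy parallel corpus: (English sentence, French sentence). Invented data.
PARALLEL = [
    ("hello my friend", "bonjour mon ami"),
    ("hello sir", "bonjour monsieur"),
    ("my pen", "ma plume"),
    ("the pen is red", "la plume est rouge"),
    ("hello", "bonjour"),
]

def cooccurrence_rates(pairs):
    """Map (english_word, french_word) to the share of sentence pairs
    containing the English word whose French side contains the French word."""
    english_counts = Counter()
    joint_counts = Counter()
    for english, french in pairs:
        en_words = set(english.split())
        fr_words = set(french.split())
        english_counts.update(en_words)
        # Count every English/French word pairing seen in this sentence pair.
        joint_counts.update(product(en_words, fr_words))
    return {
        (en, fr): joint / english_counts[en]
        for (en, fr), joint in joint_counts.items()
    }

rates = cooccurrence_rates(PARALLEL)

def best_match(word):
    """Return the French word that most often accompanies an English word."""
    candidates = {fr: rate for (en, fr), rate in rates.items() if en == word}
    return max(candidates, key=candidates.get)
```

Nobody told the program that hello means bonjour; `best_match("hello")` lands there only because the two words keep turning up together. With five sentences, plenty of other words end in ties, which is exactly why the approach needs so much data.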

In 1988, the IBM team published their findings in the Proceedings of the 12th International Conference on Computational Linguistics. Their paper, A statistical approach to language translation, listed 7 authors. The sixth author on the paper is a name that should be familiar: Robert L. Mercer.

The Edge

In 1993, Bob Mercer received a job offer from Renaissance Technologies, a hedge fund. In her New Yorker piece, Mayer writes that Mercer threw the job offer away because he’d never heard of Renaissance. He wasn’t the only person. Renaissance was a secretive hedge fund that had been created by the mathematician James Simons in the early 80s. Simons was 40 at the time, having spent decades in academia where he left behind a new field of mathematics that would eventually be picked up by physicists studying string theory.

Some folks buy new cars to make sense of that transition to midlife. But for his midlife crisis, Simons built the most profitable hedge fund that has ever existed.

It isn’t hard to imagine how someone with Mercer’s skills would be useful at Renaissance. Mercer was part of a team that taught a machine to figure out, for itself, highly complex relationships between two data sources: English texts and French texts. If instead you turned that sort of machine on the stock market and asked it to find—for itself—complex relationships between the prices of AAPL and MSFT, well, you could use that information to make some real money.

Renaissance persisted with its offers, and eventually Mercer, then making a modest salary at IBM and “struggling” with the typical costs of upper middle class life, relented.

At present, Mercer’s own investments in the hedge fund where he works, plus whatever generous salary he now earns as president of Renaissance (Simons retired several years ago), have him closing in on $1 billion in wealth.

Fascinating (or cringe-inducing, depending on your views of Wall Street) as Mercer’s rise from sixth author on an obscure scientific paper to head of the most profitable hedge fund in the history of capitalism may be, it’s Mercer’s politics—and where he decides to put his money—that is most interesting.

Mercer is a libertarian who appears to believe nothing good can come from the state. And according to sources that Mayer spoke with, he also appears to have a conspiratorial mindset often found on the extreme ends of the political spectrum. Former employees at Renaissance claimed Mercer believes the Clintons had murdered political opponents; that the U.S. should have taken the oil while invading Iraq, “since it was there;” and that blacks were better off before the 1964 Civil Rights Act was passed.

Over the last decade, Mercer has been pouring his financial resources into causes that back his libertarian/far-right ideology. He contributed to the winning side of the Citizens United case in 2009. During the 2016 presidential primaries Mercer donated $15.5 million (75 percent of the PAC’s total contributions) to Make America Number 1—a super PAC run by Mercer’s daughter Rebekah.

When Ted Cruz, Make America Number 1’s first choice for president, dropped out of the race, the super PAC—and by extension Robert Mercer—trained its considerable finances on getting Donald J. Trump elected to the highest office in the nation.

Learning Machines

The earliest versions of computers were essentially giant calculators. A computer was just a means for accomplishing some recipe of numerical operations: add this, subtract that, divide by this, integrate over that. Over time, the logic got more and more complicated, and using software, you could adjust the recipe of operations more easily. But at the end of the day, the goal was to feed in data and get the machine to spit out ‘the answer.’

In artificial intelligence (or machine learning as it’s now often called) the goal isn’t to create, a priori, a recipe to calculate answers for a given input. The goal is to show the machine some ‘input’ and desired ‘output’ (i.e., the answers) and let it learn its own rules for matching up the two.

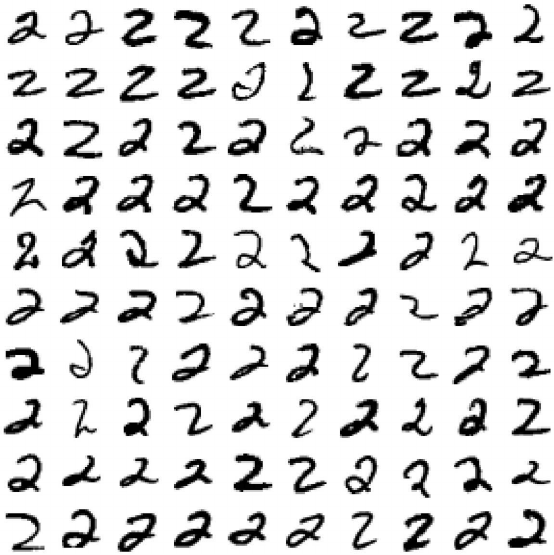

Suppose you’d like to teach a machine to recognize a handwritten numeral 2. If you were designing an expert system to do this, you’d start with a set of rules the machine could follow to detect 2s. But what would such rules even look like? Take a moment and try to define the rules for “drawing” a 2, let alone recognizing one.

Expert systems like this are brittle, because even if you succeed in programming a machine to detect a lot of hand scrawled 2s out in the wild, you will always encounter some example that doesn’t conform to your nice little rules.

Hey asshole, thanks to your little squiggle my expert system has no chance of recognizing this as a two.

No, expert systems aren’t so good at this sort of task. Better to use a statistical technique à la Mercer’s translation system. So how do you do this?

Easy, you just show the machine a million hand scrawled numbers with an answer key:

Hey computer, these are all examples of “2”—got it?

The machine has some general purpose algorithms that it then uses to learn “patterns” for itself. Basically, it generates some rules and then checks to see if those rules produce the desired “answer” (i.e., the rules should tell it that all the examples in the above image are in fact a 2).

Most likely, the machine’s first attempt at coming up with some rules will suck. So it goes back to the drawing board and produces some new rules based on the feedback it got from its previous attempt. It will often do this a million more times. Over time it learns, for itself, how to identify the number 2 (or any other number or letter) from the almost infinite number of variations of slanted and curved lines.

That is, it generates, by trial and error, some robust set of internal rules so that whenever it gets a new hand-scrawled number it can accurately identify the number as either being a 2, or being “not a 2.”
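The guess, check, adjust loop described above can be sketched with one of the oldest learning algorithms around, the perceptron. This is a swapped-in illustration, not a claim about what any particular system uses. The 3x5 bitmaps are made up rather than real handwriting, and the names `EXAMPLES`, `train`, and `is_two` are hypothetical.

```python
# Tiny 3x5 bitmaps written row by row: "1" is ink, "0" is blank paper.
# Each comes with its answer key: is this a 2 or not?
EXAMPLES = [
    ("111001111100111", True),   # a tidy 2
    ("110001010100111", True),   # a sloppier 2
    ("010110010010111", False),  # 1
    ("111001010010010", False),  # 7
    ("111101101101111", False),  # 0
    ("111001111001111", False),  # 3
]

def to_pixels(bitmap):
    return [int(ch) for ch in bitmap]

def score(weights, bias, pixels):
    """The machine's current 'rules': one weight per pixel plus a bias."""
    return bias + sum(w * p for w, p in zip(weights, pixels))

def train(examples, max_rounds=1000):
    weights = [0] * 15  # start out knowing nothing
    bias = 0
    for round_number in range(1, max_rounds + 1):
        mistakes = 0
        for bitmap, answer in examples:
            pixels = to_pixels(bitmap)
            guess = score(weights, bias, pixels) > 0
            if guess != answer:
                # Wrong guess: nudge the rules toward the right answer.
                mistakes += 1
                step = 1 if answer else -1
                weights = [w + step * p for w, p in zip(weights, pixels)]
                bias += step
        if mistakes == 0:
            return weights, bias, round_number
    raise RuntimeError("no consistent set of rules found")

weights, bias, rounds = train(EXAMPLES)

def is_two(bitmap):
    return score(weights, bias, to_pixels(bitmap)) > 0
```

After training, `is_two` agrees with the answer key on every example it was shown. The learned `weights` are just fifteen numbers, and staring at them tells you very little, which is the point made next.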

What are those internal rules the machine eventually learns to identify the number 2? It doesn’t matter. They wouldn’t make sense to us anyways.

The point is that we now have a machine that can accurately identify a 2. The same sort of technique may then be used to have it learn any other numbers or letters, or to have it learn the best strategy for playing StarCraft. Or even, to have it learn how to take and hold the highest office in the most powerful country in the world.

Political Machines

Robert Mercer’s whole life has been about building machines that were fed some data and learned, for themselves, how to get the answers he wanted: a quality machine translation, a superior stock pick. The internal logic that the machines developed to achieve their goals was beside the point. The ends justified not being able to make any sense, whatsoever, of the means.

In turning his attention to building a political machine, Mercer seems to have taken a similar approach. While the Kochs backed mainstream GOP candidates, with their brittle IF this THEN that ideologies, Mercer bet on the candidate who could adjust his own internal logic on the fly. A candidate who could read the crowds—and recognize in them patterns that could be leveraged to guide the country towards an end made in Mercer’s own peculiar worldview.

Of course, training any machine requires providing it with an answer key: correct translations, great stock picks from the past. How does Mercer make sure Trump is being fed the (alt-)right answers on immigration, trade policy, and taxes now that Bannon is no longer in the White House?

Remember when Google built a machine that watched hundreds of hours of YouTube videos and became completely obsessed with cats, despite not knowing what a cat was before the experiment started? Well, it did.

If you now replace YouTube with Breitbart TV (which Bannon hopes to position as a rival to Fox News) and Google’s AI with Donald Trump—you have your answer.

What replaces ‘cat,’ though, is anyone’s guess.